RULE-BASED VS. LEARNING-BASED AI:

Rule-based artificial intelligence (AI) systems use a set of predefined rules to make decisions or perform tasks. These rules are typically encoded by humans and are based on expert knowledge of the domain in which the system is operating. Rule-based systems are good at handling specific, well-defined problems and can make decisions quickly, but they can be inflexible and may not be able to handle exceptions or new situations.

Learning-based artificial intelligence (AI) systems use machine learning algorithms to learn from data, allowing them to adapt and improve their decision-making as more data becomes available. They can handle more complex, dynamic problems than rule-based systems. However, they may require large amounts of training data and can be more computationally expensive than rule-based systems.
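The contrast between the two approaches can be sketched with a toy spam filter. This is a minimal illustration, not a production method: the function names, the "number of links" feature, the fixed cutoff of 3, and the training data are all assumptions made up for this example.

```python
from statistics import mean

def rule_based_is_spam(link_count: int) -> bool:
    """Rule-based: a human fixed the cutoff in advance (illustrative value)."""
    return link_count > 3

def learn_threshold(examples: list[tuple[int, bool]]) -> float:
    """Learning-based: place the cutoff midway between the two class means."""
    spam_counts = [count for count, is_spam in examples if is_spam]
    ham_counts = [count for count, is_spam in examples if not is_spam]
    return (mean(spam_counts) + mean(ham_counts)) / 2

def learned_is_spam(link_count: int, threshold: float) -> bool:
    """Apply the cutoff that was estimated from data."""
    return link_count > threshold

# Hypothetical labeled examples: (number of links in the message, is it spam?)
training_data = [(0, False), (1, False), (2, False), (6, True), (8, True), (10, True)]
threshold = learn_threshold(training_data)

print(threshold)                      # 4.5
print(rule_based_is_spam(4))          # True: the fixed rule says spam
print(learned_is_spam(4, threshold))  # False: the learned cutoff disagrees
```

The rule-based function never changes unless a person edits it, while the learned cutoff moves whenever the training data changes.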

There are several types of learning models. The most common are:

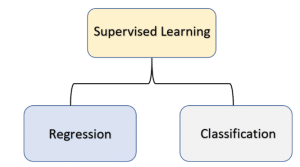

- Supervised Learning: Supervised learning is a type of machine learning where a model is trained on a labeled dataset to make predictions or decisions. The training dataset is composed of pairs of input features and corresponding output labels, and the goal is to learn a mapping from the inputs to the labels. Once trained, the model is tested on unseen data to evaluate its performance. There are two main types of supervised learning tasks:

- Classification: The goal is to predict a discrete label or class for a given input. Examples include image classification, spam detection, and predicting whether it will rain tomorrow.

- Regression: The goal is to predict a continuous value for a given input. Examples include stock price prediction, temperature forecasting, and sales forecasting.

Supervised learning is widely used in application domains such as natural language processing, computer vision, and speech recognition. Because the model is trained on labeled data, it is often more accurate on a well-defined prediction task than an unsupervised approach. However, it requires a large amount of labeled data, which can be expensive and time-consuming to collect.
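Both supervised tasks can be sketched in a few lines of plain Python. The example below is a minimal illustration under stated assumptions: it uses a 1-nearest-neighbor classifier and a least-squares line fit as stand-ins for "a classification model" and "a regression model", and the function names and tiny datasets are invented for this sketch.

```python
def nearest_neighbor_classify(train, x):
    """Classification: return the label of the training input closest to x."""
    _, closest_label = min(train, key=lambda pair: abs(pair[0] - x))
    return closest_label

def fit_line(xs, ys):
    """Regression: least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Labeled pairs (input feature, output label): temperature in Celsius -> class
labeled_temps = [(2.0, "cold"), (5.0, "cold"), (20.0, "warm"), (25.0, "warm")]
print(nearest_neighbor_classify(labeled_temps, 18.0))  # warm

# Labeled pairs (input feature, continuous output) that lie on y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope * 5.0 + intercept)  # prediction for the unseen input x = 5
```

In both cases the model only sees input-output pairs during training and is then asked about an input it has not seen before.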

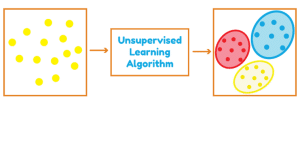

- Unsupervised Learning: Unsupervised learning is a type of machine learning where a model is trained on an unlabeled dataset to discover patterns or structures in the data. Unlike supervised learning, there are no specific input-output pairs provided in the training data; the model is instead given the task of finding patterns or relationships in the data. There are several types of unsupervised learning algorithms, including:


- Clustering: The goal is to group similar data points together. Examples include grouping customers based on their buying habits, grouping images based on their content, and grouping genes based on their expression levels.

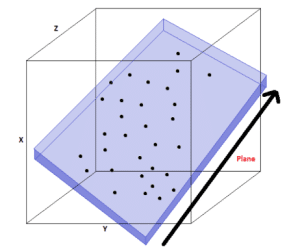

- Dimensionality reduction: The goal is to reduce the number of features in the data while preserving as much information as possible. Examples include reducing the number of variables in a dataset, compressing images, and visualizing high-dimensional data.

- Anomaly detection: The goal is to identify data points that are different from the rest of the dataset. Examples include identifying fraudulent transactions, detecting defective items, and detecting errors in sensor data.

- Association rule learning: The goal is to discover relationships between variables in a dataset. Examples include finding patterns in a customer’s shopping cart, discovering the relationships between genes, and identifying the factors that influence a customer’s behavior.

Unsupervised learning is useful in cases where labeled data is not available or is too expensive to acquire. It can be used for exploratory data analysis, anomaly detection, and for preprocessing the data before applying supervised learning. It can also be used for feature extraction, compression, and visualization. However, it may require more computational power and can be more complex to interpret than supervised learning.
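Two of the tasks above, clustering and anomaly detection, can be sketched without any labels. This is a minimal sketch under stated assumptions: it uses one-dimensional k-means for clustering and a z-score cutoff for anomaly detection, and the function names, starting centers, cutoff of 2.0, and data are all made up for illustration.

```python
from statistics import mean, pstdev

def kmeans_1d(points, centers, iterations=10):
    """Clustering: repeatedly assign points to the nearest center, then re-average."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster ended up empty.
        centers = [mean(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters

def find_anomalies(values, z_threshold=2.0):
    """Anomaly detection: flag values far from the mean, measured in standard deviations."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []
    return [v for v in values if abs(v - mu) / sigma > z_threshold]

# Hypothetical customer spending; no labels are provided.
spending = [10.0, 12.0, 11.0, 50.0, 52.0, 51.0]
centers, clusters = kmeans_1d(spending, centers=[10.0, 52.0])
print(centers)   # [11.0, 51.0]
print(clusters)  # [[10.0, 12.0, 11.0], [50.0, 52.0, 51.0]]

# Hypothetical sensor readings with one faulty value.
readings = [20.0, 21.0, 19.0, 20.0, 22.0, 20.0, 21.0, 95.0]
print(find_anomalies(readings))  # [95.0]
```

Neither function is told which group a point belongs to or which reading is faulty; both results come from the structure of the data alone.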


- Reinforcement Learning: Reinforcement learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with its environment and receiving feedback in the form of rewards or penalties. The agent learns to optimize its behavior over time to maximize the cumulative reward. The RL process can be broken down into three main components:

- The agent: The agent is the decision-maker. It receives observations of the environment and selects actions to take.

- The environment: The environment is the external system with which the agent interacts. It receives the actions from the agent and returns observations and rewards.

- The reward: The reward is a scalar value that the agent receives from the environment as feedback. The agent’s goal is to maximize the cumulative reward over time.

RL is different from supervised learning because the agent does not have access to labeled training data. Instead, it must explore the environment and learn from the rewards it receives. RL is also different from unsupervised learning because the agent has a goal or objective to achieve.

RL has been successfully applied to a wide range of problems, such as robotics, game-playing, recommendation systems, and control systems. It is also used in many real-world applications, such as self-driving cars, energy management systems, and healthcare systems.
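The interaction between agent, environment, and reward can be sketched with tabular Q-learning on a toy problem. This is a minimal sketch, not a reference implementation: the five-cell corridor, the function names, and the hyperparameters (`alpha`, `gamma`, `epsilon`, the episode count, and the step cap) are assumptions chosen for illustration.

```python
import random

N_STATES = 5                 # corridor cells 0..4; cell 4 is the goal
ACTIONS = ["left", "right"]

def step(state, action):
    """Environment: apply the action and return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == "left" else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def choose_action(q, state, epsilon, rng):
    """Agent: explore with probability epsilon, otherwise act greedily (ties broken at random)."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[state].values())
    return rng.choice([a for a in ACTIONS if q[state][a] == best])

def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values from rewards alone; no labeled data is used."""
    rng = random.Random(seed)
    q = [{a: 0.0 for a in ACTIONS} for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(200):  # cap on episode length
            action = choose_action(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            future = 0.0 if done else gamma * max(q[next_state].values())
            q[state][action] += alpha * (reward + future - q[state][action])
            state = next_state
            if done:
                break
    return q

def greedy_policy(q):
    """Best known action in each non-goal cell."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]

q_table = train()
print(greedy_policy(q_table))
```

After training, the greedy policy should choose "right" in every cell, because that is the shortest path to the only reward. The agent is never told this directly; it discovers it by trying actions and observing the rewards that follow.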

Are Computers Intelligent?

After attempting the previous quiz, you would have realized the answer to this question. Let’s have a look at it again:

Computers are used to:

- Store information in their memory like documents, images, and videos, which you can access immediately.

- Run programs that interact with users to help solve their problems, such as doing calculations.

- Play games.

- Watch movies and videos.

- Surf on the Internet.

In all the above examples, the task is defined and the computer runs programs to get the result. E.g., while watching movies, the computer reads the file from the hard disk and displays it on the screen using a video player.

This is not termed intelligence because no learning process is involved. The computer is just following a set of predefined instructions.

But when a computer is equipped with a program that learns and acts based on its environment, it can be termed intelligent.

Let’s see some examples:

- Self Driving Cars: They can recognize their environment and act accordingly.

- Emotion Identifiers: They can identify a person's emotions using their knowledge.

- In-Game AI Bots: They can play the game as if a human were playing.

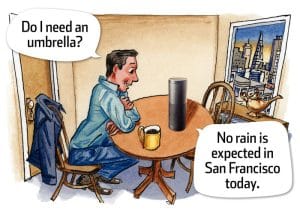

- Alexa or HomePod (Siri): They recognize what you say and react accordingly.

In all of the above cases, the computer uses its knowledge to get information and act accordingly.

Artificial Intelligence – Definition

Artificial Intelligence is the study and design of intelligent agents (computers) that can analyze their environment and produce actions that maximize their chance of success.

A computer is termed intelligent if it can gather information, analyze it to make decisions, and act to complete a task automatically with little to no human intervention.

Advantages of AI

There are many advantages that an AI machine has over humans. Some of them are:

- Speed of execution: Computers can be fed a large amount of information and can retrieve it almost instantaneously. As a rough illustration, while a doctor makes one diagnosis in ~10 minutes, an AI system could process on the order of a million cases in the same time.

- They are not lazy: Computers don’t require sleep the way humans do. They can calculate, analyze, and perform tasks tirelessly and round the clock without wearing out.

- Accuracy: Computers can achieve very high accuracy on some tasks.

- Less Biased: Computers are not affected or influenced by emotions, feelings, wants, needs, and other factors that often cloud our judgment and intelligence.

| ARTIFICIAL INTELLIGENCE | HUMAN INTELLIGENCE |
|---|---|
| Created by human intelligence | Evolutionary process |
| Processes information faster | Slower |
| Highly objective | May be subjective |
| More accurate | May be less accurate |
| Cannot adapt to changes well | Easily adapts to changes |
| Cannot multitask that well | Easily multitasks |
| Below-average social skills | Better social skills on average |
| Optimization | Innovation |
| Cheap | Expensive |
| Biased | Biased |

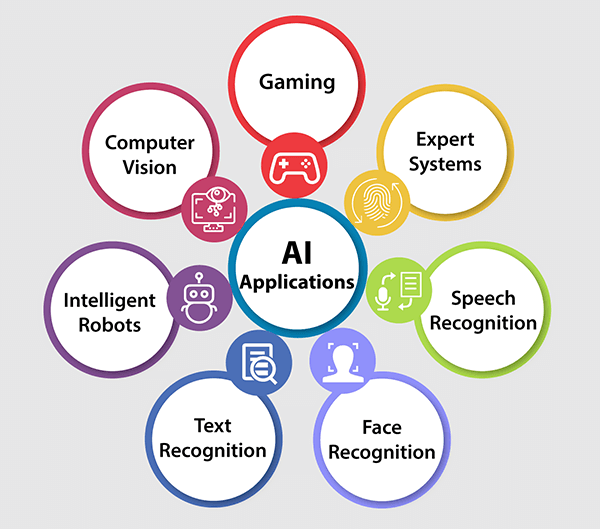

AI has many applications, which continue to grow by the day. Some of them are:

Computer Vision

AI is used in many popular object recognition systems, such as those in self-driving cars, security systems, and industrial robots. In this example, the robot identifies the position of the pancakes and sorts them using a robotic arm.

Face Recognition

AI is widely used to detect and recognize faces from images. Facebook uses it to identify people in photos and tag them.

Gaming

AI plays an important role in strategic games such as chess or PUBG, where it helps a machine evaluate a large number of possible positions. An AI system called AlphaZero taught itself from scratch how to master the games of chess, shogi, and Go.

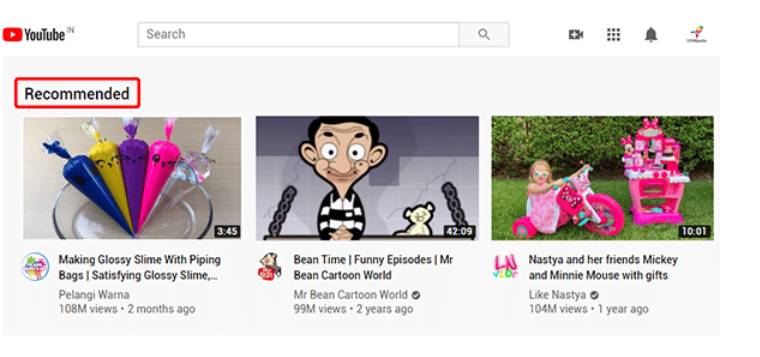

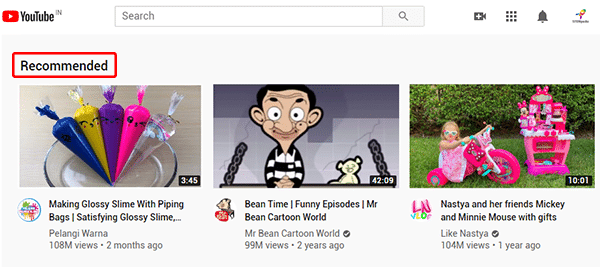

Expert Systems

An expert system is a machine or piece of software that imitates the decision-making ability of humans and uses it to provide explanations and advice to users, e.g., YouTube uses one to recommend new videos to you.

Speech Recognition

Some AI-based speech recognition systems can ‘hear’ a person, understand what they say, and ‘respond’ in the form of speech, e.g., Siri, Alexa, and Google Assistant.

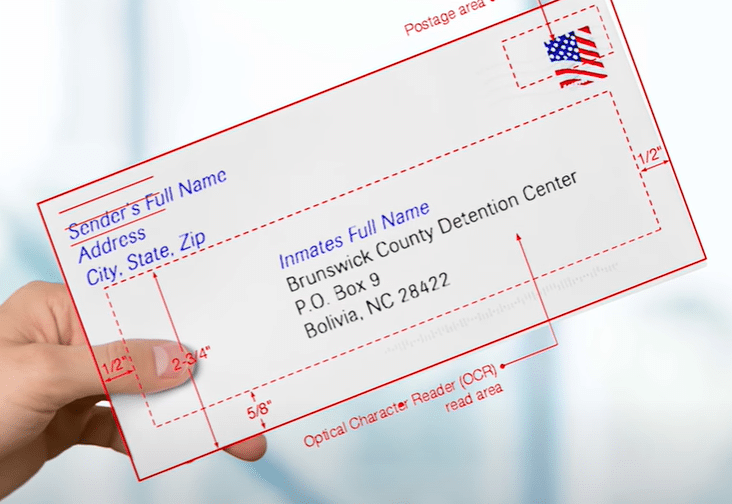

Text Recognition from Image

Handwriting recognition software reads text written on paper, recognizes the shapes of the letters, and converts them into editable text.

Intelligent Robots

These robots can perform the instructions given by a human in an interactive manner.

Conclusion

In this topic, we learned how to identify which computer is intelligent and which is not and what Artificial Intelligence is. We also saw what advantages AI and machines, in general, have over humans.

In the next lesson, we will look at some of these in detail.